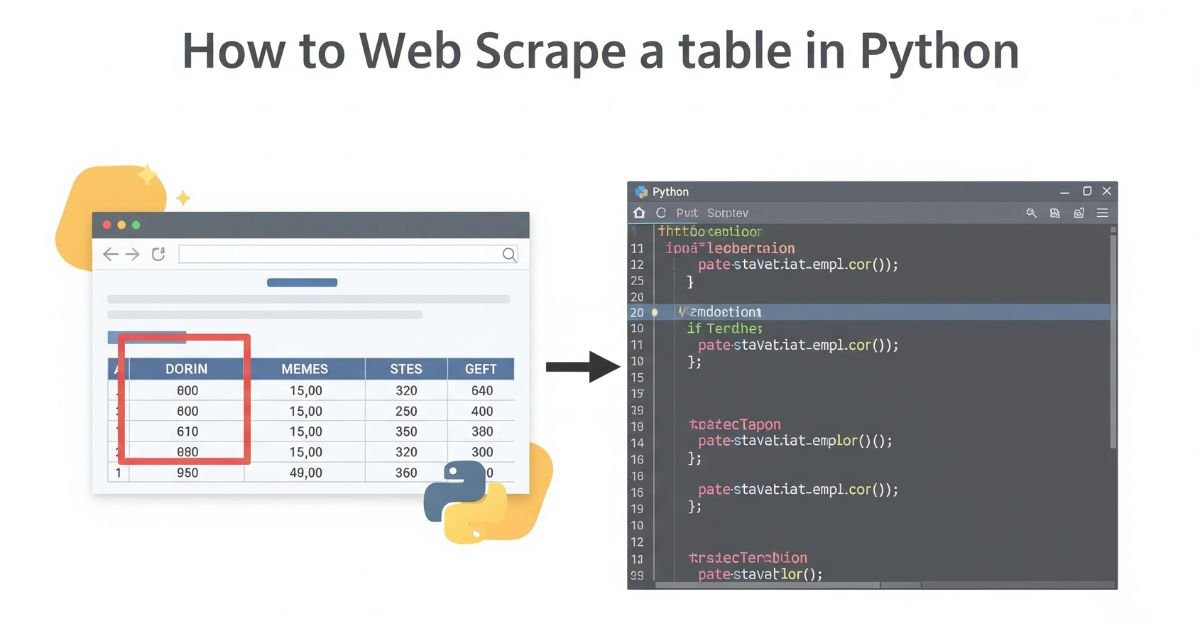

Learning how to web scrape a table in Python gives you a seriously useful skill. You don’t have to copy data by hand anymore: you can extract data straight from websites and use it for analysis, reports, or projects. Tables are like gold, holding stock quotes, sports statistics, job postings, product information, and more.

This tutorial walks you through how to web scrape a table in Python, with examples and working code. You’ll learn the tools, tackle common problems, and get useful results. We’ll keep things simple: no jargon, just clear steps and plain words. Let’s dive in.

Why Scraping Tables Is So Useful

Web tables often hold structured data—ready for you to collect. But copying them one row at a time is a nightmare. If you’ve done that, you know the pain. Web scraping makes this easy and fast. Think of it as teaching Python to be your data assistant.

Once you know how to web scrape a table in Python, you can gather loads of info in seconds and turn online tables into CSV files or Excel sheets. That helps researchers, analysts, students, and developers save time and work smarter.

What You Should Know First

Before you start scraping, you need some basics. You should know Python, how HTML works, and what tags like <table>, <tr>, and <td> mean. These are the building blocks of any online table.

It’s also important to scrape legally. Not every website allows it, and many set rules. Check the site’s robots.txt file to see which paths are off-limits to bots, and read its terms of service. Respect sites, avoid flooding them with requests, and don’t break the law.
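Python’s standard library can read robots.txt rules for you. The sketch below uses `urllib.robotparser`. The rules, the `my-scraper` user-agent name, and the URLs are made-up examples. In real use you would call `set_url()` and `read()` to download the live file instead of parsing a string. robots.txt is only part of the picture, and the site’s terms of service still apply.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a real site you would instead call
# parser.set_url("https://example.com/robots.txt") and parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "my-scraper", "https://example.com/stats/table"))   # True
print(is_allowed(SAMPLE_ROBOTS_TXT, "my-scraper", "https://example.com/private/data"))  # False
```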

The Tools You’ll Need

To web scrape a table in Python, you need the right tools: libraries that download web pages and process their contents. These tools make scraping simple.

Here’s what each tool does:

| Library | Use | Install with |
| --- | --- | --- |
| requests | To fetch webpage content | pip install requests |
| BeautifulSoup | To parse and search the HTML | pip install beautifulsoup4 |
| pandas | To clean and save the table data | pip install pandas |

Find the Right Table on the Page

Many websites have more than one table. So first, you need to locate the one you want. Use your browser’s “Inspect” tool. Right-click on the table and look for the tag <table>.

See if it has an id, class, or other distinctive attribute. If not, you’ll need to look at all the tables and choose the one that fits your needs. This step is key: if you pick the wrong table, your script will extract the wrong data or nothing at all.
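Once Inspect shows you an `id` or `class`, BeautifulSoup can target that table directly. In this sketch the HTML, the `prices` id, and the `data-table` class are invented for illustration. Swap in whatever attributes you found on the real page.

```python
from bs4 import BeautifulSoup

# Small stand-in page; in practice this HTML comes from the downloaded page.
html = """
<table class="nav"><tr><td>Home</td></tr></table>
<table id="prices" class="data-table">
  <tr><th>Item</th><th>Price</th></tr>
  <tr><td>Apple</td><td>1.20</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")

by_id = soup.find("table", id="prices")             # match on the id attribute
by_class = soup.find("table", class_="data-table")  # class_ because "class" is a Python keyword
by_css = soup.select_one("table#prices")            # the same table via a CSS selector

print(by_id is by_class is by_css)  # True: all three point at the same <table>
print(len(soup.find_all("table")))  # 2: the page has two tables in total
```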

Download the Page Content

You can’t scrape what you don’t have. Start by getting the full HTML of the page. Python’s requests library helps you do that fast. But some sites block bots, so add headers to look like a real browser.

Here’s the idea in plain words. You ask the site for a copy of its page. If the site agrees, it sends back the full HTML.
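A minimal sketch of this step with requests follows. The `fetch_html` name and the exact User-Agent string are illustrative assumptions. The `headers`, `timeout`, and `raise_for_status()` features are standard parts of requests.

```python
from typing import Optional

import requests

# Illustrative browser-style User-Agent; any realistic value works.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"
    )
}


def fetch_html(url: str, session: Optional[requests.Session] = None, timeout: float = 10.0) -> str:
    """Download a page and return its HTML as text."""
    http = session or requests.Session()
    response = http.get(url, headers=HEADERS, timeout=timeout)
    response.raise_for_status()  # stop on 4xx/5xx instead of parsing an error page
    return response.text
```

Usage would look like `html = fetch_html("https://example.com/stats")`, where the URL is a placeholder. Passing in a shared `Session` lets several requests reuse one connection.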

Parse the HTML to Find the Table

Once you have the page, the next step is to read that HTML and find your table. That’s where BeautifulSoup comes in.

You’ll search for all <table> tags. If there’s more than one, you select the one that matches your target. This tool lets you navigate the web page like a map. It points straight to your table.
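When a table has no id or class, you can scan every table returned by `find_all("table")` and keep the one whose headers match what you expect. `find_table_with_header` is a hypothetical helper name. Picking by position, as in `soup.find_all("table")[1]`, also works, but it breaks more easily when the page layout changes.

```python
from typing import Optional

from bs4 import BeautifulSoup
from bs4.element import Tag


def find_table_with_header(html: str, header_text: str) -> Optional[Tag]:
    """Return the first <table> whose <th> cells include header_text, else None."""
    soup = BeautifulSoup(html, "html.parser")
    for table in soup.find_all("table"):
        headers = [th.get_text(strip=True) for th in table.find_all("th")]
        if header_text in headers:
            return table
    return None


html = """
<table><tr><th>Menu</th></tr></table>
<table><tr><th>Player</th><th>Goals</th></tr><tr><td>Ana</td><td>7</td></tr></table>
"""
table = find_table_with_header(html, "Goals")
print(table is not None)  # True: the second table has a "Goals" header
```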

Extract the Table Rows and Columns

Now you’re close. The table is in sight. You just need to grab each row. Then you grab each cell from the row. That gives you the actual data you want.

Think of it like a grid. You read each row, pick out the values, and save them in a list. You’ll use the tr tag for rows and td for cells (header cells use th). Strip the extra whitespace from each value, and your data is ready to use.
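Here is one way to write that loop. The sample HTML and the `table_rows` name are illustrative. The function collects both `td` and `th` cells, so header rows aren’t lost.

```python
from typing import List

from bs4 import BeautifulSoup
from bs4.element import Tag


def table_rows(table: Tag) -> List[List[str]]:
    """Turn a BeautifulSoup <table> into a list of rows, each a list of cell strings."""
    rows = []
    for tr in table.find_all("tr"):
        # Header rows use <th>, data rows use <td>; collect both.
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["td", "th"])]
        if cells:  # skip rows with no cells at all
            rows.append(cells)
    return rows


html = """<table>
  <tr><th>City</th><th>Temp</th></tr>
  <tr><td> Oslo </td><td>4</td></tr>
  <tr><td>Lima</td><td>19</td></tr>
</table>"""
table = BeautifulSoup(html, "html.parser").find("table")
print(table_rows(table))
# [['City', 'Temp'], ['Oslo', '4'], ['Lima', '19']]
```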

Turn Your Data into a DataFrame

Once you’ve got the table in list format, it’s time to make it beautiful. You use pandas to turn it into a DataFrame. That’s just a smart table that Python can work with easily.

From here, you can filter, sort, and save the data. Want it in a CSV file? One line of code does it. Now your scraped data is ready for Excel, reports, or charts.
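A minimal sketch of this step is below. The `rows` list is hard-coded sample data standing in for what a row-extraction loop would produce, and the code assumes the first row holds the column names.

```python
import pandas as pd

# Sample rows as a row-extraction loop might return them; the first row is the header.
rows = [
    ["City", "Temp"],
    ["Oslo", "4"],
    ["Lima", "19"],
]

df = pd.DataFrame(rows[1:], columns=rows[0])
df["Temp"] = pd.to_numeric(df["Temp"])  # scraped text -> numbers, so sorting and filtering work
warm = df[df["Temp"] > 10]              # example filter: keep rows above 10

csv_text = df.to_csv(index=False)       # returns a string when no path is given
# df.to_csv("table.csv", index=False)   # passing a file name writes the CSV to disk instead
print(csv_text)
```

For an Excel file, `df.to_excel("table.xlsx", index=False)` works the same way, provided the `openpyxl` package is installed.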

What About Complex Tables?

Some tables have merged rows or columns. Others span several pages. These are harder, but you can still handle them. Cells with rowspan or colspan need extra logic: copy each merged cell’s value into every row and column it covers, so every row ends up with the same number of columns.
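One way to handle merged cells is to build a grid keyed by (row, column) and fill every slot a spanned cell covers. This is a sketch under simple assumptions: no nested tables, and well-formed `rowspan`/`colspan` values. Malformed values fall back to 1. `table_to_grid` is a hypothetical name.

```python
from typing import Dict, List, Tuple

from bs4 import BeautifulSoup
from bs4.element import Tag


def _span(cell: Tag, attr: str) -> int:
    """Read rowspan/colspan as an int, falling back to 1 for missing or odd values."""
    try:
        return max(1, int(cell.get(attr, 1)))
    except (TypeError, ValueError):
        return 1


def table_to_grid(table: Tag) -> List[List[str]]:
    """Expand rowspan/colspan so each row has one value per column."""
    grid: Dict[Tuple[int, int], str] = {}
    for r, tr in enumerate(table.find_all("tr")):
        c = 0
        for cell in tr.find_all(["td", "th"], recursive=False):
            while (r, c) in grid:  # skip slots already filled by a rowspan from above
                c += 1
            text = cell.get_text(strip=True)
            rowspan, colspan = _span(cell, "rowspan"), _span(cell, "colspan")
            for dr in range(rowspan):
                for dc in range(colspan):
                    grid[(r + dr, c + dc)] = text  # copy the value into every covered slot
            c += colspan
    if not grid:
        return []
    n_rows = max(r for r, _ in grid) + 1
    n_cols = max(c for _, c in grid) + 1
    return [[grid.get((r, c), "") for c in range(n_cols)] for r in range(n_rows)]


html = """<table>
<tr><th>Team</th><th colspan="2">Score</th></tr>
<tr><td rowspan="2">A</td><td>1</td><td>2</td></tr>
<tr><td>3</td><td>4</td></tr>
</table>"""
grid = table_to_grid(BeautifulSoup(html, "html.parser").find("table"))
print(grid)  # [['Team', 'Score', 'Score'], ['A', '1', '2'], ['A', '3', '4']]
```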

Paginated tables are another challenge. You’ll need to loop through pages using URLs or buttons. Always test with a small sample first. That helps you avoid mistakes.
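For URL-based pagination, a loop like the sketch below can collect rows page by page. It assumes the page number appears in the URL, as in the hypothetical template `https://example.com/results?page={page}`. It also assumes that an empty page means you’ve passed the last one. You pass in your own `fetch` and `parse_rows` functions, so a short test run with a small `max_pages` is easy. Sites that paginate with buttons instead usually need a browser tool such as Selenium.

```python
import time
from typing import Callable, List

Row = List[str]


def scrape_all_pages(
    url_template: str,
    fetch: Callable[[str], str],
    parse_rows: Callable[[str], List[Row]],
    max_pages: int = 20,
    delay_seconds: float = 1.0,
) -> List[Row]:
    """Visit page 1, 2, 3, ... until a page has no rows or max_pages is reached."""
    all_rows: List[Row] = []
    for page in range(1, max_pages + 1):
        html = fetch(url_template.format(page=page))
        rows = parse_rows(html)
        if not rows:  # an empty page usually means we've run past the last one
            break
        all_rows.extend(rows)
        time.sleep(delay_seconds)  # pause between requests to go easy on the server
    return all_rows
```

If every page repeats the header row, drop the duplicates from the combined list before building a DataFrame.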

Full Example You Can Try

Let’s say the page you want has just one table. You use requests to get the page and BeautifulSoup to find the table. Then you loop through each row and turn the result into a DataFrame.

Here’s a table that shows each step:

| Step | Tool Used | Goal |
| --- | --- | --- |
| Get page | requests | Download HTML |
| Parse HTML | BeautifulSoup | Find the table |
| Extract rows and columns | Python loops | Pull clean data |
| Save as DataFrame | pandas | Organize and export |
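Here is one way the four steps in the table above could fit together in a single script. The URL and User-Agent are placeholders. The code assumes a simple table: its first row holds the headers, it has no merged cells, and every row has the same number of cells. Nothing runs on import; call `main()` once `URL` points at a page you’re allowed to scrape.

```python
import pandas as pd
import requests
from bs4 import BeautifulSoup

# Hypothetical page with a single table; point this at a page you're allowed to scrape.
URL = "https://example.com/page-with-one-table"
HEADERS = {"User-Agent": "Mozilla/5.0 (compatible; table-scraper-example/0.1)"}


def table_html_to_dataframe(html: str) -> pd.DataFrame:
    """Parse the first <table> in the HTML; treat its first row as column names."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        raise ValueError("No <table> found; it may be loaded by JavaScript.")

    rows = []
    for tr in table.find_all("tr"):
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["td", "th"])]
        if cells:
            rows.append(cells)
    if not rows:
        raise ValueError("The table has no rows.")

    header, *body = rows
    return pd.DataFrame(body, columns=header)


def main() -> None:
    # Step 1: get the page.
    response = requests.get(URL, headers=HEADERS, timeout=10)
    response.raise_for_status()
    # Steps 2 to 4: find the table, extract the rows, build the DataFrame.
    df = table_html_to_dataframe(response.text)
    df.to_csv("table.csv", index=False)  # export for Excel, reports, or charts
    print(df.head())
```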

Common Errors and How to Fix Them

Sometimes your scraper won’t work right away. Maybe the table isn’t found. Or maybe the file is empty. Don’t panic. It happens to everyone.

Here are some typical issues:

| Problem | Reason | Solution |
| --- | --- | --- |
| Table not found | Wrong index or dynamic content | Use find_all() or Selenium |
| Blank rows | Wrong tags used | Use both td and th |
| Unicode errors | Special characters | Set encoding to UTF-8 |
| Site blocks request | No headers | Add user-agent headers |
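Two of these fixes can be built into small helpers, sketched below. Both names are hypothetical. `pick_table` fails with a message that says how many tables were actually found, which points you toward a wrong index or JavaScript-loaded content. `decode_page` decodes raw bytes as UTF-8 explicitly instead of relying on a guessed encoding.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag


def pick_table(html: str, index: int = 0) -> Tag:
    """Return the index-th <table>, with a clear error if it isn't there."""
    tables = BeautifulSoup(html, "html.parser").find_all("table")
    if not 0 <= index < len(tables):
        raise LookupError(
            f"Wanted table #{index}, but the page has {len(tables)} table(s). "
            "Check the index, or the table may be added by JavaScript."
        )
    return tables[index]


def decode_page(raw: bytes, encoding: str = "utf-8") -> str:
    """Decode downloaded bytes explicitly; undecodable bytes become U+FFFD instead of crashing."""
    return raw.decode(encoding, errors="replace")
```

With requests, `response.content` gives the raw bytes for `decode_page`. Alternatively, set `response.encoding = "utf-8"` before reading `response.text`.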

Tips to Scrape Smarter

Good scrapers follow rules. Don’t hammer a site with hundreds of hits per second. That gets you blocked. Add delays between requests. Randomize headers if needed.
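One way to add delays is a small wrapper that waits a random interval between requests, as sketched below. `PoliteFetcher` is a hypothetical name. The 1 to 3 second default range is an assumption you should tune to the site. It works with a `requests.Session()` or any object with a compatible `get()` method.

```python
import random
import time


class PoliteFetcher:
    """Wrap a requests-style session and leave a randomized pause between requests."""

    def __init__(self, session, min_delay: float = 1.0, max_delay: float = 3.0):
        self.session = session       # e.g. requests.Session()
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._last_request = None    # monotonic timestamp of the previous request

    def get_text(self, url: str, **kwargs) -> str:
        if self._last_request is not None:
            # Pick a random gap so requests are spread out rather than fired back to back.
            gap = random.uniform(self.min_delay, self.max_delay)
            elapsed = time.monotonic() - self._last_request
            if elapsed < gap:
                time.sleep(gap - elapsed)
        kwargs.setdefault("timeout", 10)  # never wait forever on a slow server
        response = self.session.get(url, **kwargs)
        self._last_request = time.monotonic()
        response.raise_for_status()
        return response.text
```

Usage might be `fetcher = PoliteFetcher(requests.Session())` followed by `html = fetcher.get_text(url)` inside your loop.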

Use functions to keep your code clean. Test your scraper often. Websites change. Your code needs to change too. Back up your data regularly, and always label your files properly.

How to Handle JavaScript-Loaded Tables

Sometimes a table shows up in your browser, but when you look at the HTML your script downloaded (or at the page source), there’s no data. That’s because the site uses JavaScript to load the table after the page opens, and requests only fetches the initial HTML. This breaks the normal scraping method.

To deal with this, you use Selenium. It opens the page like a real browser, waits for everything to load, and then gives you the full HTML. It takes longer but works great for dynamic websites. You can also set wait times to ensure the table appears before scraping.
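A sketch of the Selenium approach follows. It assumes Selenium 4 and Google Chrome are installed. Recent Selenium 4 releases can locate a matching ChromeDriver automatically. `fetch_rendered_html` and its `table_css` parameter are hypothetical names, so adjust the CSS selector to the table you found with Inspect. The returned HTML can then be parsed with BeautifulSoup as usual. Playwright is an alternative with a similar workflow.

```python
def fetch_rendered_html(url: str, table_css: str = "table", timeout: float = 15.0) -> str:
    """Open url in headless Chrome, wait for a table to appear, and return the page HTML."""
    # Imported here so the rest of a script still runs without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # run Chrome without opening a window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until an element matching table_css is in the DOM, or raise after `timeout` seconds.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, table_css))
        )
        return driver.page_source
    finally:
        driver.quit()  # always close the browser, even if waiting failed
```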

Automating Your Web Scraper

Scraping once is good. Scraping daily is better. Maybe you want daily exchange rates, weather updates, or job listings. You can schedule your scraper to run every morning using task tools.

On Windows, use Task Scheduler. On Mac or Linux, use cron jobs. These tools run your Python script at fixed times. Just make sure your code runs cleanly, logs errors, and saves each run’s output under a unique file name so it doesn’t overwrite earlier results.
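Here is a sketch of the logging and unique-file-name advice for a scheduled run. The logger name, the `rates` prefix, and the helper names are hypothetical. `scrape_to_csv` stands for whatever function does your actual scraping and saving. Putting the date and time in the file name keeps each run’s output separate.

```python
import logging
from datetime import datetime
from pathlib import Path
from typing import Callable, Optional

logger = logging.getLogger("table_scraper")  # hypothetical logger name


def dated_csv_path(folder: Path, prefix: str, now: Optional[datetime] = None) -> Path:
    """Build a per-run file name such as rates_2024-05-01_0700.csv."""
    stamp = (now or datetime.now()).strftime("%Y-%m-%d_%H%M")
    return folder / f"{prefix}_{stamp}.csv"


def run_once(scrape_to_csv: Callable[[Path], None], folder: Path, prefix: str) -> bool:
    """Run one scheduled scrape; log success or failure instead of failing silently."""
    folder.mkdir(parents=True, exist_ok=True)
    target = dated_csv_path(folder, prefix)
    try:
        scrape_to_csv(target)
    except Exception:
        logger.exception("Scrape failed for %s", target)  # the full traceback goes to the log
        return False
    logger.info("Saved %s", target)
    return True
```

At startup, something like `logging.basicConfig(filename="scraper.log", level=logging.INFO)` sends these messages to a file. A crontab entry such as `0 7 * * * /usr/bin/python3 /path/to/scraper.py` would then run the script every day at 7:00. Both paths are placeholders.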

Real-World Use Cases of Table Scraping

Now let’s look at where scraping tables with Python pays off in real life. This isn’t just a cool skill. It solves real problems.

| Field | What You Can Scrape | Use Case Example |
| --- | --- | --- |
| Finance | Stock prices, earnings reports | Daily stock tracker |
| Education | University course lists | Comparing programs from different colleges |
| Sports | Match scores, player stats | Fantasy league data collection |
| Travel | Flight prices, hotel listings | Budget trip planner |
| Job Market | Job boards, company listings | Job alert system |

All these can be automated. That’s the beauty of scraping.

When to Use APIs Instead of Scraping

Sometimes scraping isn’t the best tool. Some websites offer public APIs. These are made for developers and often return clean, ready-to-use data. APIs are usually more stable than scraped pages, explicitly permitted by the site, and easier to work with.

Before you scrape, always check whether an API is available. For example, Twitter, YouTube, and some government portals offer APIs. But if the data is only available in an HTML table, that’s when scraping the table with Python is the right tool.

Ethics and Responsibility of Web Scraping

Even though scraping feels easy, you must always act with respect. Don’t overload servers, bypass blocks, or steal data from paid services. Websites have rules for a reason. Violating them can lead to legal problems or being banned.

Always give credit, don’t resell scraped data without permission, and avoid scraping personal info. Use your scraper for learning, research, or building tools—not for shady stuff.

FAQs

Is it legal to scrape any website?

No. Always check the website’s policy. Some sites block scraping in their terms.

What if the table is loaded with JavaScript?

Use Selenium or Playwright to wait for the full page to load before scraping.

Can I scrape a login-protected table?

Yes, but you must log in first using a session or automation tools.
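For a plain HTML login form, a session that keeps cookies between requests is often enough. The sketch below assumes such a form. The field names (for example `username` and `password`) and both URLs are hypothetical, so copy the real ones from the site’s login form. Logins that use CSRF tokens or JavaScript need extra steps or a browser tool such as Selenium or Playwright. Only do this with an account you’re allowed to use, and within the site’s terms.

```python
from typing import Dict


def login_and_fetch(session, login_url: str, credentials: Dict[str, str], table_url: str) -> str:
    """Log in with a form POST, then reuse the same session (and its cookies) to fetch a page."""
    # `session` is expected to be a requests.Session(), which stores the login cookies.
    response = session.post(login_url, data=credentials, timeout=10)
    response.raise_for_status()
    page = session.get(table_url, timeout=10)
    page.raise_for_status()
    return page.text
```

In practice you would call it inside `with requests.Session() as session:` so the connection is closed afterwards.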

How do I avoid getting blocked?

Use headers, slow down requests, and add delays between hits.

Can I scrape more than one table at once?

Yes. Use find_all('table') and loop through each one you need.

Conclusion

Now you know how to web scrape a table in Python. You’ve learned tools like requests, BeautifulSoup, and pandas. You’ve seen how to clean, save, and export data. You’ve even handled complex tables. Web scraping is like data hunting—you’ve got the map, tools, and skills. Just keep practicing, follow the rules, and you’ll master it.